license: cc-by-4.0
language:
- en
- zh
viewer: true
configs:
- config_name: default
  data_files:
  - split: val
    path: data/**/*.jsonl

ScienceMetaBench

🤗 HuggingFace Dataset | 💻 GitHub Repository

ScienceMetaBench is a benchmark dataset for evaluating the accuracy of metadata extraction from scientific literature PDF files. The dataset covers three major categories: academic papers, textbooks, and ebooks, and can be used to assess the performance of Large Language Models (LLMs) or other information extraction systems.

📊 Dataset Overview

Data Types

This benchmark includes three types of scientific literature:

Papers

- Mainly from academic journals and conferences

- Contains academic metadata such as DOI, keywords, etc.

Textbooks

- Formally published textbooks

- Includes ISBN, publisher, and other publication information

Ebooks

- Digitized historical documents and books

- Covers multiple languages and disciplines

Data Batches

This benchmark has undergone two rounds of data expansion, with each round adding new sample data:

data/
├── 20250806/              # First batch (August 6, 2025)
│   ├── ebook_0806.jsonl
│   ├── paper_0806.jsonl
│   └── textbook_0806.jsonl
└── 20251022/              # Second batch (October 22, 2025)
    ├── ebook_1022.jsonl
    ├── paper_1022.jsonl
    └── textbook_1022.jsonl

Note: The two batches of data complement each other to form a complete benchmark dataset. You can choose to use a single batch or merge them as needed.
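As a minimal sketch of merging the batches, the snippet below groups every record by document type. It assumes the directory layout shown above and takes the type from the file-name prefix (for example paper_0806.jsonl becomes "paper"). The helper names load_jsonl and load_batches are illustrative and not part of the repository, and no deduplication is attempted.

```python
import json
from collections import defaultdict
from pathlib import Path


def load_jsonl(path):
    """Read one JSONL file into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


def load_batches(data_dir="data"):
    """Group the records of every batch by document type.

    Assumption: files live at <data_dir>/<batch>/<type>_<mmdd>.jsonl.
    """
    merged = defaultdict(list)
    for path in sorted(Path(data_dir).glob("*/*.jsonl")):
        doc_type = path.stem.split("_")[0]  # "paper", "textbook" or "ebook"
        merged[doc_type].extend(load_jsonl(path))
    return dict(merged)


if __name__ == "__main__":
    for doc_type, records in load_batches().items():
        print(doc_type, len(records))
```

To work with a single batch instead, point data_dir at a parent that contains only that batch directory, or call load_jsonl on one file directly.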

PDF Files

The pdf/ directory contains the original PDF files corresponding to the benchmark data, with a directory structure consistent with the data/ directory.

File Naming Convention: All PDF files are named using their SHA256 hash values, in the format {sha256}.pdf. This naming scheme ensures file uniqueness and traceability, making it easy to locate the corresponding source file using the sha256 field in the JSONL data.
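The lookup described above could be sketched as follows. This assumes that the sha256 field is the lowercase hex digest of the PDF's raw bytes and that PDFs sit somewhere below pdf/; find_pdf and verify_pdf are hypothetical helper names, not functions shipped with the dataset.

```python
import hashlib
from pathlib import Path


def find_pdf(sha256, pdf_dir="pdf"):
    """Return the path of {sha256}.pdf anywhere under pdf_dir, or None."""
    matches = sorted(Path(pdf_dir).rglob(f"{sha256}.pdf"))
    return matches[0] if matches else None


def verify_pdf(path):
    """Check that the file's content hashes to the name it is stored under.

    Assumption: the file name is the lowercase hex SHA256 of the file bytes.
    """
    digest = hashlib.sha256(Path(path).read_bytes()).hexdigest()
    return digest == Path(path).stem.lower()
```

A record from the JSONL data can then be traced to its source with find_pdf(record["sha256"]), and verify_pdf offers a quick integrity check after download.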

📝 Data Format

All data files are in JSONL format (one JSON object per line).

Academic Paper Fields

{
  "sha256": "SHA256 hash of the file",
  "doi": "Digital Object Identifier",
  "title": "Paper title",
  "author": "Author name",
  "keyword": "Keywords (comma-separated)",
  "abstract": "Abstract content",
  "pub_time": "Publication year"
}

Textbook/Ebook Fields

{
  "sha256": "SHA256 hash of the file",
  "isbn": "International Standard Book Number",
  "title": "Book title",
  "author": "Author name",
  "abstract": "Introduction/abstract",
  "category": "Classification number (e.g., Chinese Library Classification)",
  "pub_time": "Publication year",
  "publisher": "Publisher"
}
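A small sanity check for the two field layouts above might look like this. The field tuples are copied from the schemas in this section; the function name missing_fields and the doc_type labels ("paper", "textbook", "ebook") are assumptions made for illustration.

```python
import json

# Field names taken from the two schemas documented above.
PAPER_FIELDS = ("sha256", "doi", "title", "author", "keyword", "abstract", "pub_time")
BOOK_FIELDS = ("sha256", "isbn", "title", "author", "abstract",
               "category", "pub_time", "publisher")


def missing_fields(record, doc_type):
    """List the documented fields that are absent from one parsed record."""
    expected = PAPER_FIELDS if doc_type == "paper" else BOOK_FIELDS
    return [name for name in expected if name not in record]


# Each JSONL line parses to one record.
line = '{"sha256": "abc", "doi": "10.1186/s41038-017-0090-z", "title": "T"}'
record = json.loads(line)
print(missing_fields(record, "paper"))
```

Running such a check over every line before evaluation helps separate malformed records from genuine extraction errors.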

📖 Data Examples

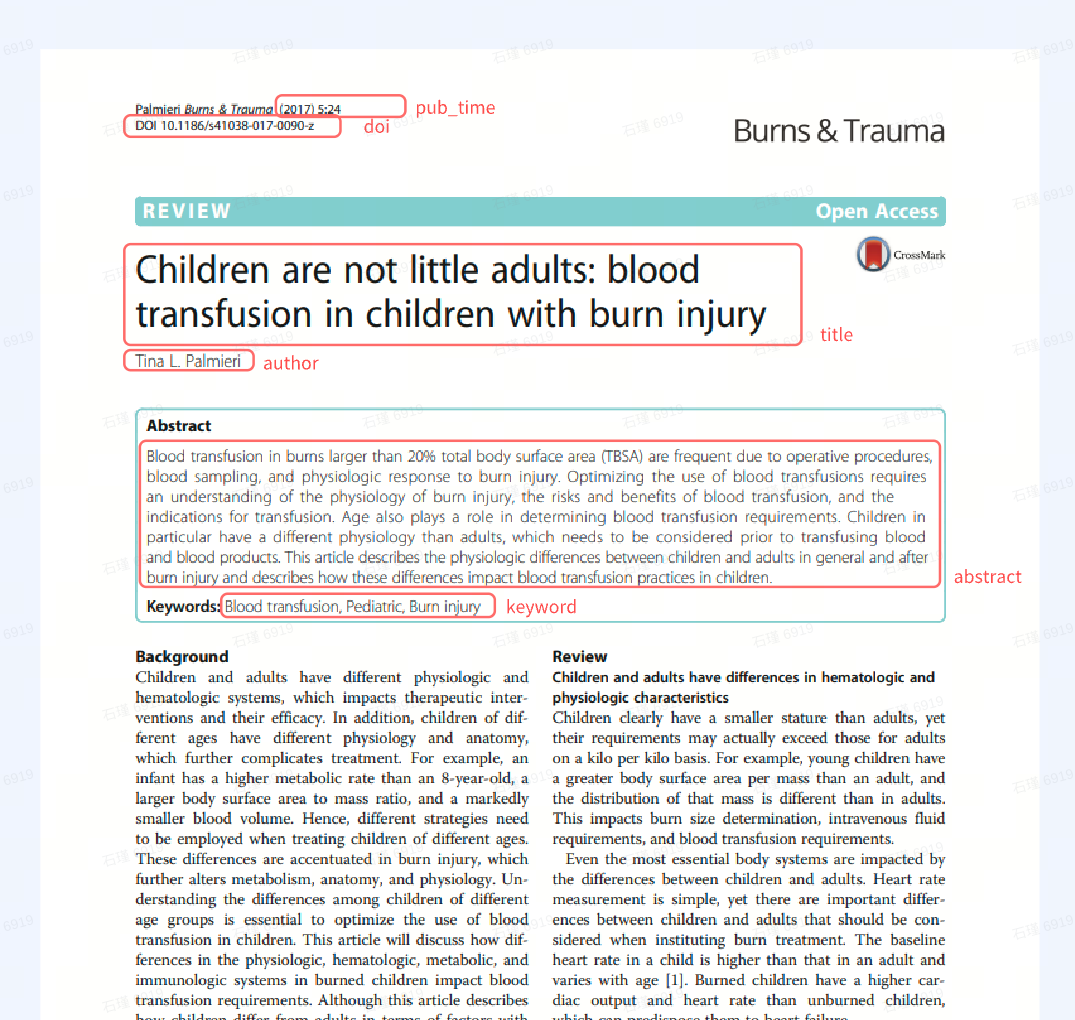

Academic Paper Example

The following image shows an example of metadata fields extracted from an academic paper PDF:

As shown in the image, the following key information needs to be extracted from the paper's first page:

- DOI: Digital Object Identifier (e.g., 10.1186/s41038-017-0090-z)

- Title: Paper title

- Author: Author name

- Keyword: List of keywords

- Abstract: Paper abstract

- pub_time: Publication time (usually the year)

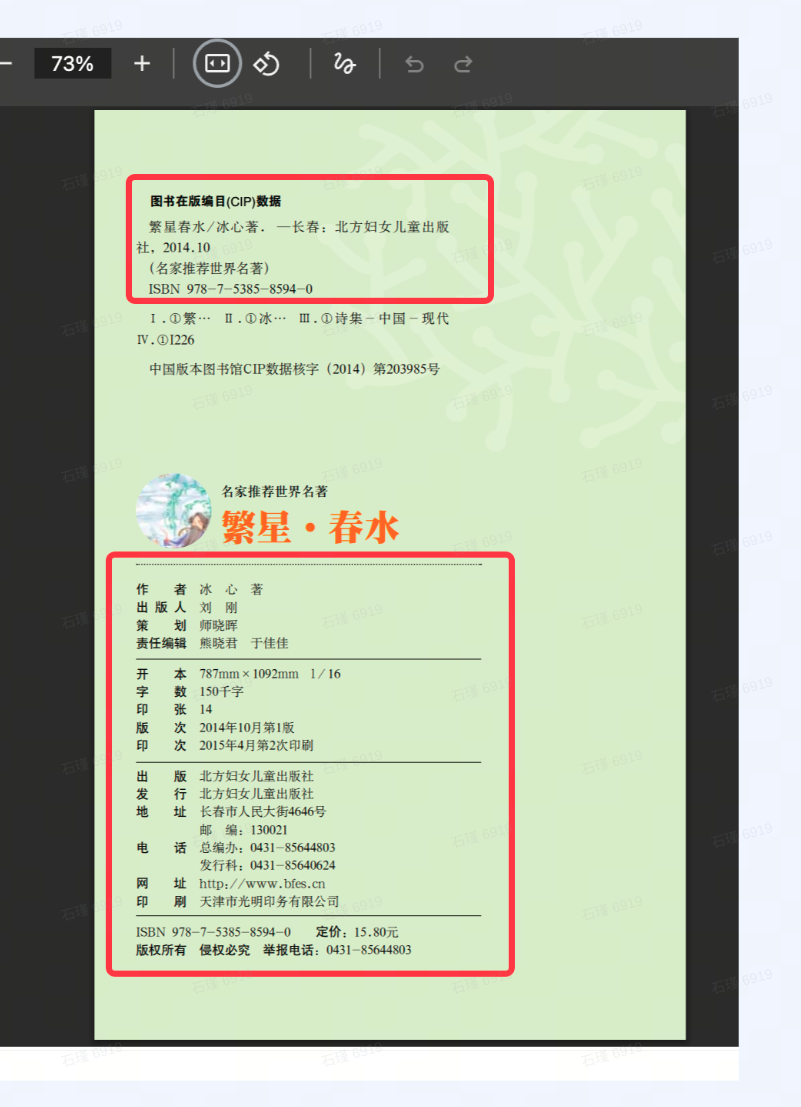

Textbook/Ebook Example

The following image shows an example of metadata fields extracted from the copyright page of a Chinese ebook PDF:

As shown in the image, the following key information needs to be extracted from the book's copyright page:

- ISBN: International Standard Book Number (e.g., 978-7-5385-8594-0)

- Title: Book title

- Author: Author/editor name

- Publisher: Publisher name

- pub_time: Publication time (year)

- Category: Book classification number

- Abstract: Content introduction (if available)

These examples demonstrate the core task of the benchmark test: accurately extracting structured metadata information from PDF documents in various formats and languages.

📊 Evaluation Metrics

Core Evaluation Metrics

This benchmark uses a string similarity-based evaluation method, providing two core metrics:

Similarity Calculation Rules

This benchmark uses a string similarity algorithm based on SequenceMatcher, with the following specific rules:

- Empty Value Handling: One is empty and the other is not → similarity is 0

- Complete Match: Both are identical (including both being empty) → similarity is 1

- Case Insensitive: Convert to lowercase before comparison

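- Sequence Matching: Use SequenceMatcher's ratio, which is computed from the longest contiguous matching blocks of the two strings rather than a classic longest common subsequence, to calculate similarity (range: 0-1)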

Similarity Score Interpretation:

- 1.0: Perfect match

- 0.8-0.99: Highly similar (may have minor formatting differences)

- 0.5-0.79: Partial match (extracted main information but incomplete)

- 0.0-0.49: Low similarity (extraction result differs significantly from ground truth)
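The calculation rules and score bands above can be sketched with Python's standard difflib module. This is an illustration under stated assumptions, not the code in compare.py: treating None as empty, casting values to str, and the band labels returned by describe are choices made here, and no whitespace normalization is applied.

```python
from difflib import SequenceMatcher


def similarity(predicted, reference):
    """Case-insensitive similarity in [0, 1] following the rules above."""
    a = "" if predicted is None else str(predicted).lower()
    b = "" if reference is None else str(reference).lower()
    if a == b:               # identical, including both empty
        return 1.0
    if not a or not b:       # exactly one side is empty
        return 0.0
    return SequenceMatcher(None, a, b).ratio()


def describe(score):
    """Map a score to the interpretation bands listed above."""
    if score >= 1.0:
        return "perfect match"
    if score >= 0.8:
        return "highly similar"
    if score >= 0.5:
        return "partial match"
    return "low similarity"


print(similarity("Deep Learning", "deep learning"))  # identical after lowercasing
print(describe(similarity("abcd", "abce")))
```

SequenceMatcher.ratio() returns 2*M/T, where M is the number of matched characters and T is the combined length of both strings, so "abcd" against "abce" scores 2*3/8 = 0.75.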

1. Field-level Accuracy

Definition: The average similarity score for each metadata field.

Calculation Method:

Field-level Accuracy = Σ(similarity of that field across all samples) / total number of samples

Example: When evaluating the title field on 100 samples, sum the title similarity of every sample and divide by 100 to obtain the accuracy for that field.

Use Cases:

- Identify which fields the model performs well or poorly on

- Optimize extraction capabilities for specific fields

- For example: If doi accuracy is 0.95 and abstract accuracy is 0.75, the model needs improvement in extracting abstracts
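A minimal sketch of the field-level calculation follows. It assumes predictions and references are lists of dicts paired by their sha256 value, and that a sample with no prediction counts as an empty extraction; the compact similarity helper and the name field_accuracy are illustrative and may differ from what compare.py does.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Compact version of the similarity rules (assumed, not from compare.py)."""
    a = "" if a is None else str(a).lower()
    b = "" if b is None else str(b).lower()
    if a == b:
        return 1.0
    if not a or not b:
        return 0.0
    return SequenceMatcher(None, a, b).ratio()


def field_accuracy(predictions, references, field):
    """Mean similarity of one field over all benchmark samples."""
    by_hash = {p["sha256"]: p for p in predictions}
    total = 0.0
    for ref in references:
        pred = by_hash.get(ref["sha256"], {})  # missing prediction -> empty value
        total += similarity(pred.get(field, ""), ref.get(field, ""))
    return total / len(references) if references else 0.0


refs = [{"sha256": "a", "title": "Deep Learning"},
        {"sha256": "b", "title": "Graph Theory"}]
preds = [{"sha256": "a", "title": "deep learning"}]
print(field_accuracy(preds, refs, "title"))  # (1.0 + 0.0) / 2
```

Dividing by the number of benchmark samples, rather than by the number of predictions, keeps skipped documents from inflating the score.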

2. Overall Accuracy

Definition: The average of all evaluated field accuracies, reflecting the model's overall performance.

Calculation Method:

Overall Accuracy = Σ(field-level accuracies) / total number of fields

Example: When evaluating 7 fields (isbn, title, author, abstract, category, pub_time, publisher), sum the 7 field-level accuracies and divide by 7.

Use Cases:

- Provide a single quantitative metric for overall model performance

- Facilitate horizontal comparison between different models or methods

- Serve as an overall objective for model optimization
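The overall figure is an unweighted mean of the field-level values, which can be sketched in a few lines. The accuracy numbers below are made up purely to show the arithmetic, and overall_accuracy is a hypothetical name.

```python
def overall_accuracy(field_accuracies):
    """Unweighted mean of the per-field accuracies."""
    if not field_accuracies:
        return 0.0
    return sum(field_accuracies.values()) / len(field_accuracies)


# Hypothetical field-level accuracies for the 7 textbook/ebook fields.
example = {
    "isbn": 0.90, "title": 0.95, "author": 0.85, "abstract": 0.70,
    "category": 0.60, "pub_time": 0.90, "publisher": 0.80,
}
print(round(overall_accuracy(example), 4))
```

Because every field carries the same weight, a short field such as pub_time influences the result as much as a long one such as abstract, which is worth keeping in mind when comparing models.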

Using the Evaluation Script

compare.py provides a convenient evaluation interface:

from compare import main, write_similarity_data_to_excel

# Define file paths and fields to compare
file_llm = 'data/llm-label_textbook.jsonl'    # LLM extraction results
file_bench = 'data/benchmark_textbook.jsonl'  # Benchmark data

# For textbooks/ebooks
key_list = ['isbn', 'title', 'author', 'abstract', 'category', 'pub_time', 'publisher']

# For academic papers
# key_list = ['doi', 'title', 'author', 'keyword', 'abstract', 'pub_time']

# Run evaluation and get metrics
accuracy, key_accuracy, detail_data = main(file_llm, file_bench, key_list)

# Output results to Excel (optional)
write_similarity_data_to_excel(key_list, detail_data, "similarity_analysis.xlsx")

# View evaluation metrics
print("Field-level Accuracy:", key_accuracy)
print("Overall Accuracy:", accuracy)
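The layout of the LLM results file is not spelled out here. A reasonable assumption is that it mirrors the benchmark JSONL: one object per line, carrying the sha256 plus the extracted fields. Under that assumption, predictions could be written like this (write_jsonl is an illustrative helper, not part of compare.py):

```python
import json


def write_jsonl(records, path):
    """Write one JSON object per line, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record, ensure_ascii=False) + "\n")


# Hypothetical model output for one textbook.
predictions = [
    {"sha256": "abc123", "isbn": "978-7-5385-8594-0", "title": "示例书名",
     "author": "", "abstract": "", "category": "", "pub_time": "2015",
     "publisher": ""},
]
```

Calling write_jsonl(predictions, "data/llm-label_textbook.jsonl") would then produce a file at the path used for file_llm above. Passing ensure_ascii=False keeps Chinese titles as readable characters instead of \u escapes.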

Output Files

The script generates an Excel file containing detailed sample-by-sample analysis:

- sha256: File identifier

- For each field (e.g., title):

  - llm_title: LLM extraction result

  - benchmark_title: Benchmark data

  - similarity_title: Similarity score (0-1)
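Once the spreadsheet exists, the per-sample columns make it easy to pull out the weakest extractions. The sketch below works on rows represented as dicts, which could be obtained with pandas.read_excel("similarity_analysis.xlsx").to_dict("records"). It assumes the column names follow the pattern listed above; low_similarity_rows and the 0.5 default threshold (the lower edge of the "partial match" band) are choices made for this example.

```python
def low_similarity_rows(rows, field, threshold=0.5):
    """Return the sha256 of every sample whose similarity_<field> is below threshold."""
    column = f"similarity_{field}"
    return [row["sha256"] for row in rows if float(row[column]) < threshold]


# Hypothetical rows in the shape described above.
rows = [
    {"sha256": "a", "llm_title": "deep learning", "benchmark_title": "Deep Learning",
     "similarity_title": 1.0},
    {"sha256": "b", "llm_title": "", "benchmark_title": "Graph Theory",
     "similarity_title": 0.0},
]
print(low_similarity_rows(rows, "title"))
```

The returned hashes can be matched against the {sha256}.pdf files to inspect the source pages that caused the failures.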

📈 Statistics

Data Scale

First Batch (20250806):

- Ebooks: 70 records

- Academic Papers: 70 records

- Textbooks: 71 records

- Subtotal: 211 records

Second Batch (20251022):

- Ebooks: 354 records

- Academic Papers: 399 records

- Textbooks: 46 records

- Subtotal: 799 records

Total: 1010 benchmark test records

The data covers multiple languages (English, Chinese, German, Greek, etc.) and multiple disciplines, with both batches together providing a rich and diverse set of test samples.

🎯 Application Scenarios

- LLM Performance Evaluation: Assess the ability of large language models to extract metadata from PDFs

- Information Extraction System Testing: Test the accuracy of OCR, document parsing, and other systems

- Model Fine-tuning: Use as training or fine-tuning data to improve model information extraction capabilities

- Cross-lingual Capability Evaluation: Evaluate the model's ability to process multilingual literature

🔬 Data Characteristics

- ✅ Real Data: Real metadata extracted from actual PDF files

- ✅ Diversity: Covers literature from different eras, languages, and disciplines

- ✅ Challenging: Includes ancient texts, non-English literature, complex layouts, and other difficult cases

- ✅ Traceable: Each record includes SHA256 hash and original path

📋 Dependencies

pandas>=1.3.0

openpyxl>=3.0.0

Install dependencies:

pip install pandas openpyxl

🤝 Contributing

If you would like to:

- Report data errors

- Add new evaluation dimensions

- Expand the dataset

Please submit an Issue or Pull Request.

📧 Contact

If you have questions or suggestions, please contact us through Issues.

Last Updated: December 26, 2025